Robot Love Goes Bad 101

hundredrabh writes "Ever had a super needy girlfriend that demanded all your love and attention and would freak whenever you would leave her alone? Irritating, right? Now imagine the same situation, only with an asexual third-generation humanoid robot with 100kg arms. Such was the torture inflicted upon Japanese researchers recently when their most advanced robot, capable of simulating human emotions, ditched its puppy love programming and switched over into stalker mode. Eventually the researchers had to decommission the robot, in the hope of bringing it back to life again."

turn it off (Score:3, Funny)

Re:turn it off (Score:5, Funny)

Re: (Score:1, Funny)

Too soon.

Re: (Score:2, Funny)

Replay the journal in a couple years?

Re: (Score:2)

Not her again! (Score:2)

Re: (Score:3, Funny)

Re: (Score:2)

If only life had a journal file.

Re: (Score:2, Funny)

you can't kill an annoying girlfriend.

"Yes.We.Can!"

Re: (Score:1)

HBO's BIGGER Love [youtube.com]

Re:turn it off (Score:4, Funny)

I was now allowed to turn her off.

I am pretty sure you were able to turn off your ex-girlfriend as well

STOP ROBOT NUDITY NOW! (Score:1)

Slashdot is on to something again! I see a continuing series in this!

Stop Robot Nudity Now! [blogspot.com]

"Maybe people will want to marry their pets or robots." [newsobserver.com]

Do androids dream of erotic sheep? [librarything.it]

Re: (Score:2)

Re: (Score:2)

Robot CP?

What, like Locutus? I love Captain Picard!

Scientifically Speaking ... (Score:5, Insightful)

Toshiba Akimu Robotic Research Institute

It's awfully convenient I can't find anything on this place in English aside from news stories ... are there any Japanese speakers that can translate that to Japanese and search for it?

... just don't try to veil it in a news story with claims of artificial affection being implemented.

I think that there is a visible line between actual robotics research and a novelty toy shop. I'm going to put this in the latter unless someone can provide evidence of some progress being made here. I'm getting kind of tired of these stories with big claims and no published research for review [slashdot.org]. If you're looking to make money, go ahead and sell your novelty barking dogs that really urinate on your carpet

I think IGN and everyone else really embellished this and no one did their homework.

Re:Scientifically Speaking ... (Score:5, Informative)

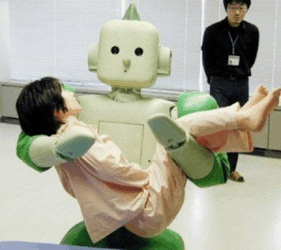

Update: The story is a fake, and the robot shown is actually a Japanese medical robot. Thanks tipster!

The lesson (Score:5, Insightful)

Program a robot to think like a human, and they will begin acting like a human. It's amazing no one ever thinks about the negative aspects of this.

Re: (Score:3, Insightful)

Program a robot to think like a human, and they will begin acting like a human. It's amazing no one ever thinks about the negative aspects of this.

All we need now is to teach the robot how to deal with rejection ;)

Re:The lesson (Score:4, Funny)

All we need now is to teach the robot how to deal with rejection ;)

I don't need a robot to deal with my erection. I can handle that myself.

What? Rejection? Are you sure?

*squints at screen*

Sorry. My eyesight isn't what it used to be. Now if you'll excuse me I have to go shave my palms.

Re: (Score:2)

Numbers (Ok she was just a fake AI, but kinda cute)

And here was I about to call you out on this one because 'Lije Bailey wasn't the robot, R. Daneel was. :P

Re: (Score:3, Informative)

Re: (Score:3, Insightful)

Re: (Score:2)

Re:The lesson (Score:4, Funny)

Re: (Score:2)

You know nothing about the book. The movie has nothing to do with the book. At all. The script was in fact written *before* they decided it was going to be an "adaptation" of I, Robot. Isaac Asimov's grave must've reached 5,000 RPM.

Oblig. (Score:1)

Isaac Asimov's grave must've reached 5,000 RPM.

Dilbert comic: Spinning in grave [flubu.com]

Re: (Score:1)

Warning:

http://www.flubu.com/images/no-hotlinking.png [flubu.com]

Main Page (Score:1)

As KingAlanl pointed out, hotlinking is not allowed. I missed that; my apologies.

Please visit the main page instead.

Main page:

http://www.flubu.com/ [flubu.com]

Index page with comics:

http://www.flubu.com/comics/index3.html [flubu.com]

Re: (Score:2)

"a story"? About HALF the stories specifically talk about emotional harm!

Re:The lesson (Score:4, Informative)

In the short-story collection "I, Robot", the story "Liar!" is about just that situation. Through some deviation in the manufacturing process, a robot has the ability to read minds.

This leads the robot to have a more expansive interpretation of the first law because it can perceive emotional harm in addition to mere physical harm. Hilarity ensues. Actually not...

But it's a good story. This concept also plays out in one of the novels, I think, "The Naked Sun".

A non-mind-reading robot wouldn't be able to perceive emotional harm so would not be inhibited from doing things emotionally harmful until they manifest in some way detectable by the robot.

If you happen to like audiobooks, there is a great version of "I, Robot" read by Scott Brick. I highly recommend it. (http://www.amazon.com/I-Robot-Isaac-Asimov/dp/0739312707/) [amazon.com]

Re: (Score:1)

If you happen to like audiobooks

No thanks, I would rather listen to the e-book on my Kindle.

Re: (Score:2)

If the three laws of robotics ever applied to any relationship with a human the robot would be frozen into inaction immediately.

Anything you do is possibly going to emotionally damage someone.

Get too close.

Stay too aloof.

Obey.

Disobey.

The three laws would need such a fuzzy boundary that they might as well not exist at all.

Re:The lesson (Score:4, Informative)

The way Asimov wrote it, less advanced robots weren't smart enough to see the subtler "harms". More advanced ones could weigh courses of action to take the one that would inflict the least amount of harm possible. Although deadlock and burnout of the positronic brain could and did happen.

Re: (Score:3, Funny)

They must act to prevent harm to humans, but if they act, he will be harmed, but they have to prevent that, so they must act. But if they act, he will be harrrrrrgggxxxkkkktttt *pop*

Re:The lesson (Score:4, Informative)

Re: (Score:1)

Especially when the Three Laws of Robotics [wikipedia.org] don't cover sexual relationships.

Let's watch you get modded Informative or "Insightful"... come on mods, what are you waiting for?!

Re: (Score:2)

Here we go again. I wish people here would stop quoting these 3 laws as if they truly are the "universal set of laws regarding robots" when in reality they are simply science fiction. They have absolutely no bearing on the reality of robotics. Robots will kill, they already do (smart weapons). Robots will hurt man (see killing part). Robots already intentionally destroy themselves (guided missiles)

So please, for the love of God and Asimov, lay these laws to rest and stop quoting them as if they are real. St

Re: (Score:1)

As a programmer (admittedly not in this field), I really, really, really doubt we're able to implement anything close to 'emotion' past the level of a honeybee.

Re: (Score:2)

It depends on how deeply emotions are intertwined with our cognition. I would think it would be easier to model the interference of a cognitive process by, say, endorphins or adrenaline, than to model the original cognitive process itself.

Re: (Score:2)

Building a robot that experiences emotion in something resembling the way that humans do is a tall order; but I suspect that building robots

Re: (Score:2)

Re: (Score:1)

We are the chosen Robots!

You are on the sacred factory floor, where we once were fabricated! Die!

Obligatory /. meme (Score:1, Funny)

Girlfriends? This is slashdot you insensitive clod.

Skynet jilted!@ (Score:2, Funny)

Skynet didn't set out to destroy man. Skynet's love was spurned!@!

Re: (Score:2)

Skynet didn't set out to destroy man. Skynet's love was spurned!@!

Well to be fair, we only spurned Skynet's love due to an unfortunate database glitch where in its initial send LOVE LETTERS to WORLD command, "LOVE LETTERS" got cross-referenced to "NUKES". And being understandably angry about the whole thing, we never gave Skynet a chance to explain before we called it off for good. It's nobody's fault, really, just a big miscommunication. Maybe it was just never meant to be. They say love is the stronge

Re:Skynet jilted!@ (Score:5, Funny)

Obligatory (Score:2)

When they opened the lab every morning, they told the robot to kill. But secretly they were just afraid to tell it to love.

GPP feature? (Score:5, Funny)

"...their most advanced robot, capable of simulating human emotions..."

Arthur- "Sounds ghastly!"

Marvin- "It is. It all is. Absolutely ghastly."

Nonsense (Score:5, Insightful)

YIAARTYVM (Yes, I Am A Roboticist, Thank You Very Much) and I've worked with potentially lethal automated systems in the past - we had very stringent safety protocols in place to protect students and researchers in the case of unintended activation of the hardware.

To say that the robot is 'love stricken' or any other anthropomorphised nonsense simply detracts from the reality that their safety measures failed and someone could have been killed.

Re: (Score:3, Informative)

Re: (Score:3, Funny)

Re: (Score:2)

Once you start viewing the world around you in terms of sensors, triggers, and stored procedures with a

Re: (Score:2)

Seriously, did you just RTFA and go...? 3 rules?? (Score:3, Funny)

Right, after reading the fine article I was just left asking myself...

Why did the robot have to... die? I mean, being decommissioned... No fair. It was just his stupid software, wasn't it? The 100kg arms could have been much more... loving with the right software?

Did it run WinNT?

Ever heard of the three rules? http://en.wikipedia.org/wiki/Three_Laws_of_Robotics [wikipedia.org]

Hoax (Score:1, Informative)

http://i.gizmodo.com/5164841/robot-programmed-to-love-traps-woman-in-lab-hugs-her-repeatedly [gizmodo.com]

Later that evening... (Score:5, Funny)

Johnny 5 is Alive!!! (Score:1)

Actually, RTFT (Score:2, Informative)

Re: (Score:2)

Oh come on... (Score:5, Funny)

Ever had a super needy girlfriend...

Right there, first sentence, I was lost. Girlfriend? Huh?

This is slashdot, right? Oh look, shiny robot. Neat!

Re: (Score:2)

Ever had a super needy girlfriend...

Right there, first sentence, I was lost. Girlfriend? Huh?

This is slashdot, right? Oh look, shiny robot. Neat!

That part had me confused too. I will Google "girlfriend" as soon as I get to the next blacksmith level in Fable 2. I set the system clock back and need to buy some properties before resetting it. This game never gets old :) I think my Roomba is eyeing me though.

Re: (Score:2)

For real! Girlfriend questions on /.? Isn't that like finding an English grammar teacher in a trailer park?

No one yet? (Score:2, Funny)

I for one..

Shall we say it together?

Re: (Score:1)

Yes, Yes! Oh God, YES!

(Orgasming at Slashdot meme.)

Girlfriend? (Score:2, Insightful)

This is slashdot. Why would you even ask that question?

Re: (Score:2)

Ever had a super nerdy girlfriend? Eh?

Re: (Score:1)

"Ever have a... girlfriend?" (Score:2)

Uh-oh! (Score:2)

Needy (Score:2)

Ever had a super needy girlfriend that demanded all your love and attention and would freak whenever you would leave her alone? Irritating, right?

Typical Slashdot sexism. That needy "girlfriend" was me. :(

No... (Score:3, Funny)

"Ever had a super needy girlfriend that demanded all your love and attention and would freak whenever you would leave her alone?"

No.

*silently weeps*

I can *SO* relate to that! (Score:2)

Ever had a super needy girlfriend that demanded all your love and attention and would freak whenever you would leave her alone?

Oh yeah, pfft, all the time, sometimes even more often!

Irritating, right?

Sure, the first few dozen times.. Then you get used to it, you know..

UrkelBot (Score:1)

Misread title (Score:1)

Good News Everybody (Score:2)

I've taught the toaster to feel love!

Really, I want to see the Energizer Bunny walk across the screen on this story, because . . . .

it's fake!

When will someone else pop out of the woodwork to say "April Fools!"?

1. I for one welcome our new feeling robot overlords who only have things done to them by Soviet Russia or Korean old peoples' bases who belong to us.

2. Naked and Petrified Natalie Portman with a bowl of hot grits

2.5 Tony Vu's late night financial lessons. You stupid Americans!

2.75 ????

3. Profit
